With the recent advances in artificial intelligence, Google has been working to apply a form of high-level AI computing known as deep learning to the field of medicine and health care.

Though further developments are underway, Google said Thursday that it has successfully developed new deep learning algorithms that can detect and diagnose diabetic retinopathy, an eye disease which can lead to blindness, as well as locate breast cancer.

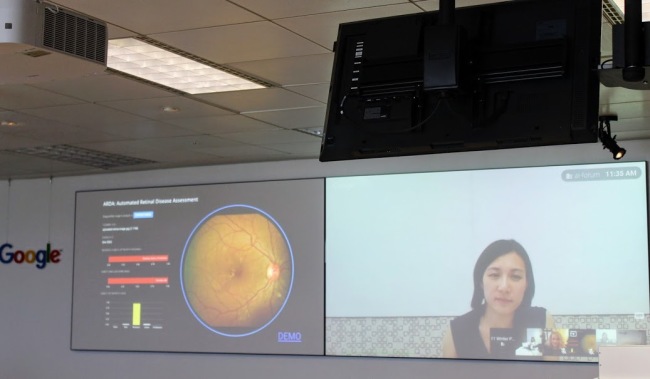

Lily Peng, product manager of the medical imaging team at Google Research, shared how the US tech giant is using deep learning to train machines to analyze medical images and automatically detect pathological cues, be it swollen blood vessels in the eye or cancerous tumors, during a video conference with the South Korean media hosted by Google Korea.

(123RF)

Based on the workings of the human brain, deep learning uses large artificial neural networks -- layers of interconnected nodes -- that rearrange themselves as new information comes in, allowing computers to self-learn without the need for human programming.

“Artificial neural networks have been around since the 1960s. But now with more powerful computing power, we can build more layers into the system to handle more complicated tasks with high accuracy,” Peng said.

“In deep learning, the feature engineering is handled by the computer itself. We don’t tell the networks what the feature is, but we give them lots of examples, and have the network itself determine what these features are on their own.”

On the medical front, Google has made significant progress on building an algorithm to read retinal scan images to discern signs of diabetic retinopathy, the fastest-growing cause of preventable blindness in the world.

Diabetic retinopathy can be detected through regular eye exams. Doctors take pictures of patients’ eyes with a special camera and check for the disease based on the amount of visible hemorrhages.

“Unfortunately, in many parts of the world, there are not enough doctors to do this grading. In India, there is a shortage of 127,000 eye doctors, while 45 percent of patients suffer vision loss before diagnosis,” said Peng, who is also a clinical scientist.

Therefore, Google designed an AI algorithm to analyze retinal images and identify features of diabetic retinopathy. The program was exposed to 128,000 retinal images labeled by 54 ophthalmologists. Labeling took place multiple times for each image to account for diagnostic variability among doctors, it said.
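Google did not say how the repeated labels for each image were combined. As a minimal sketch of one common approach, the Python snippet below assumes a simple majority vote over the grades given by different doctors, with ties resolved toward the more severe grade. The function name, the integer grading scale and the tie-breaking rule are all illustrative assumptions, not details from Google.

```python
from collections import Counter


def consensus_grade(grades):
    """Combine several doctors' grades for one image into a single label.

    Assumption: grades are integers where a higher number means more
    severe disease. The most frequent grade wins; on a tie, the more
    severe grade is chosen (a hypothetical, cautious tie-breaking rule).
    """
    if not grades:
        raise ValueError("need at least one grade")
    counts = Counter(grades)
    highest_count = max(counts.values())
    # Among the grades that share the highest count, pick the most severe.
    return max(g for g, c in counts.items() if c == highest_count)


# Hypothetical example: three doctors grade the same retinal image.
label = consensus_grade([2, 2, 3])
```

Labeling each image several times in this way keeps a single doctor's unusual reading from becoming the training label.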

As the algorithm has shown high accuracy, Google has now moved to build an interface and hardware into which doctors in India can input a retinal image and immediately receive a grade for diabetic retinopathy. The firm continues to optimize its machine to improve user convenience and plans to conduct more clinical trials to secure regulatory approval, according to Peng.

Lily Peng, product manager of the medical imaging team at Google Research, speaks during a video conference organized by Google Korea on Thursday. (Google Korea)

Another field spearheading Google’s deep learning push is cancer detection. The tech giant is developing another deep learning algorithm that surveys biopsy images to locate metastatic breast cancer that has spread to the lymph nodes.

“What we’re trying to do is find out where there is a little bit of breast cancer in the lymph node, which helps determine what stage of cancer the patient is in and guides treatment,” Peng said.

Google’s AI algorithm achieved a tumor localization score -- how accurately it can locate the cancerous tumor -- of 0.89, exceeding the score of 0.73 from a highly trained human pathologist with unlimited time for examination.

Peng, however, pointed to the algorithm’s shortcomings in its biopsy readings. The algorithm was tested against slides containing 10,000 to 400,000 images, of which 20 to 150,000 showed tumors. Google’s algorithm located tumors with a sensitivity of 92 percent, but this was when set to allow eight false positive readings per slide.

On the other hand, human doctors had a locational sensitivity of 73 percent, but zero false positives -- meaning they were less efficient at finding tumors but never identified normal tissue as a tumor.
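The trade-off between the two figures can be made concrete with a short Python sketch. Sensitivity is the share of actual tumors that are found, while false positives per slide counts normal regions wrongly flagged as tumors. The counts below are hypothetical, chosen only to reproduce the percentages quoted above; they are not Google's test data.

```python
def sensitivity(true_positives, false_negatives):
    """Fraction of actual tumors that were found."""
    total_tumors = true_positives + false_negatives
    if total_tumors == 0:
        raise ValueError("no tumors present; sensitivity is undefined")
    return true_positives / total_tumors


def false_positives_per_slide(false_positive_counts):
    """Average number of normal regions flagged as tumor on each slide."""
    if not false_positive_counts:
        raise ValueError("need at least one slide")
    return sum(false_positive_counts) / len(false_positive_counts)


# Hypothetical counts: out of 100 tumors, the algorithm finds 92
# and the pathologist finds 73.
algorithm_sensitivity = sensitivity(true_positives=92, false_negatives=8)
pathologist_sensitivity = sensitivity(true_positives=73, false_negatives=27)

# Hypothetical false-positive counts across three slides.
algorithm_fp_rate = false_positives_per_slide([8, 8, 8])
pathologist_fp_rate = false_positives_per_slide([0, 0, 0])
```

Under these assumed numbers, the algorithm misses fewer tumors but raises about eight false alarms on each slide, while the pathologist raises none.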

“If you combine the machine and the pathologist reading the slide, you’d have a very optimal situation where you have low false negative (diagnoses) and high sensitivity (for tumor location),” Peng said.

The Google researcher said it will take some time before devices running on Google’s deep learning algorithms are commercialized for use in the medical sector, as the company must secure sufficient clinical data proving their efficacy and accuracy before seeking regulatory approval.

On concerns that Google’s next-generation medical image analysis tools could be subject to tougher regulations given their novelty, Peng said that the US Food and Drug Administration takes a “neutral” or “objective” approach to the devices, as they fall within an existing product category.

The US FDA considers the new devices simply as upgraded versions of existing medical imaging and processing devices that can be approved as long as their claimed functions are backed by sufficient evidence, she said.

By Sohn Ji-young (jys@heraldcorp.com)