Researchers at the Korea Advanced Institute of Science and Technology said Monday they had developed a brain-machine interface system that interprets brain signals so that patients can control the movements of a robot hand by thought alone.

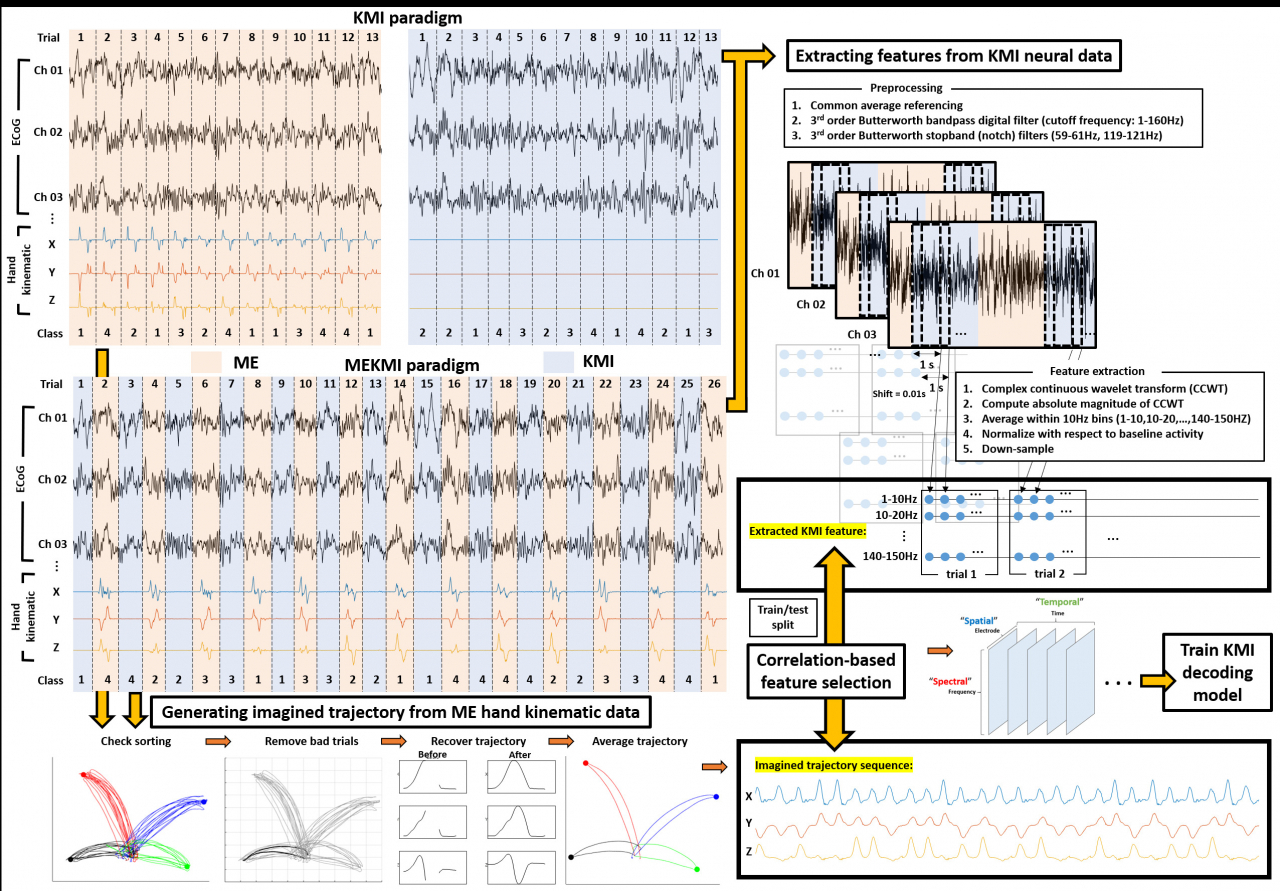

By studying the electrocorticograms (recordings of electrical activity taken directly from the surface of the brain) of epilepsy patients as they imagined hand movements, research teams led by KAIST brain and cognitive engineering professor Jeong Jae-seung and Seoul National University Medical School professor Chung Chun-kee developed a technology that decodes the cognitive signals associated with imagined hand movement trajectories.

The new decoding technology analyzes the electrical signals the brain generates when patients imagine moving their hands, then uses artificial intelligence and machine learning techniques to decode the intended movement.
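The article does not describe the decoder's internals. Purely as an illustration, the sketch below shows one common, generic way to map neural signal features to a continuous hand trajectory: a linear (ridge) regression from per-channel features to hand position, scored with the Pearson correlation between decoded and target positions, which is one metric often used for trajectory decoding. This is not the KAIST/SNU method. The feature choice, the `make_synthetic` generator, and all weights are hypothetical, and the data are synthetic rather than real electrocorticograms.

```python
import math
import random


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ridge(features, targets, lam=1.0):
    """Minimize ||Xw - y||^2 + lam * ||w||^2 via the normal equations.
    A bias column is appended to X; the bias term is not penalized."""
    X = [row + [1.0] for row in features]
    d = len(X[0])
    xtx = [[sum(X[k][i] * X[k][j] for k in range(len(X)))
            + (lam if i == j and i < d - 1 else 0.0)
            for j in range(d)] for i in range(d)]
    xty = [sum(X[k][i] * targets[k] for k in range(len(X))) for i in range(d)]
    return solve(xtx, xty)


def predict(w, features):
    """Decoded hand position for each feature vector (bias appended)."""
    return [sum(wi * xi for wi, xi in zip(w, row + [1.0])) for row in features]


def pearson(a, b):
    """Pearson correlation between decoded and target trajectories."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def make_synthetic(n, weights, noise=0.1, seed=0):
    """Hypothetical stand-in for recorded data: random per-channel 'band-power'
    features and a hand position that depends linearly on them plus noise."""
    rng = random.Random(seed)
    feats, pos = [], []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in weights]
        feats.append(x)
        pos.append(sum(w * xi for w, xi in zip(weights, x)) + rng.gauss(0.0, noise))
    return feats, pos


if __name__ == "__main__":
    true_w = [0.8, -0.5, 0.3, 0.0]  # hypothetical channel weights
    train_x, train_y = make_synthetic(200, true_w, seed=2)
    test_x, test_y = make_synthetic(100, true_w, seed=3)
    w = fit_ridge(train_x, train_y, lam=1.0)
    print("correlation on held-out data:", pearson(predict(w, test_x), test_y))
```

A linear decoder is chosen here only because it is short and easy to verify; real systems may use richer features and nonlinear models.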

According to the researchers, the new technology will help amputees and patients with disabilities move objects without the lengthy training that prosthetics require.

“The results are innovative as it allows the disabled to control robot hands without having to receive lengthy training after going through tailored analyses of their unique brain signals. We hope it will greatly contribute to the commercialization of robot hands as substitutes for prosthetics,” said professor Jeong of KAIST.

Prior research had mostly been limited to decoding brain signals generated during actual hand movements or during imagined movements of other body parts, the scientists explained in a press release.

However, analyzing real hand movements cannot yield an interface usable by amputees and paralysis patients, as they cannot actually move their hands. Moreover, interfaces built from the imagined movements of other body parts require patients to undergo extensive training, because they produce unnatural and unintended hand movements, the joint research team added.

Using the new decoding technology, the researchers were able to calculate, decode and predict the trajectories of complex imagined hand movements with 80 percent accuracy.

"This study demonstrated a high accuracy of prediction for the trajectories of imagined hand movement, and more importantly, a higher decoding accuracy of the imagined trajectories," the scientists wrote in their study published in the Journal of Neural Engineering.

The study was carried out as a follow-up to an earlier study published in February, and was funded by the National Research Foundation of Korea.
